By Joanne Kane, PhD

In May 2017, I found myself nervously taking the podium in sunny San Diego at NCBE’s Annual Bar Admissions Conference to give a plenary presentation on Multistate Bar Examination (MBE) trends. I was there to discuss a “downer” of a subject: a long-term decline in MBE scores.

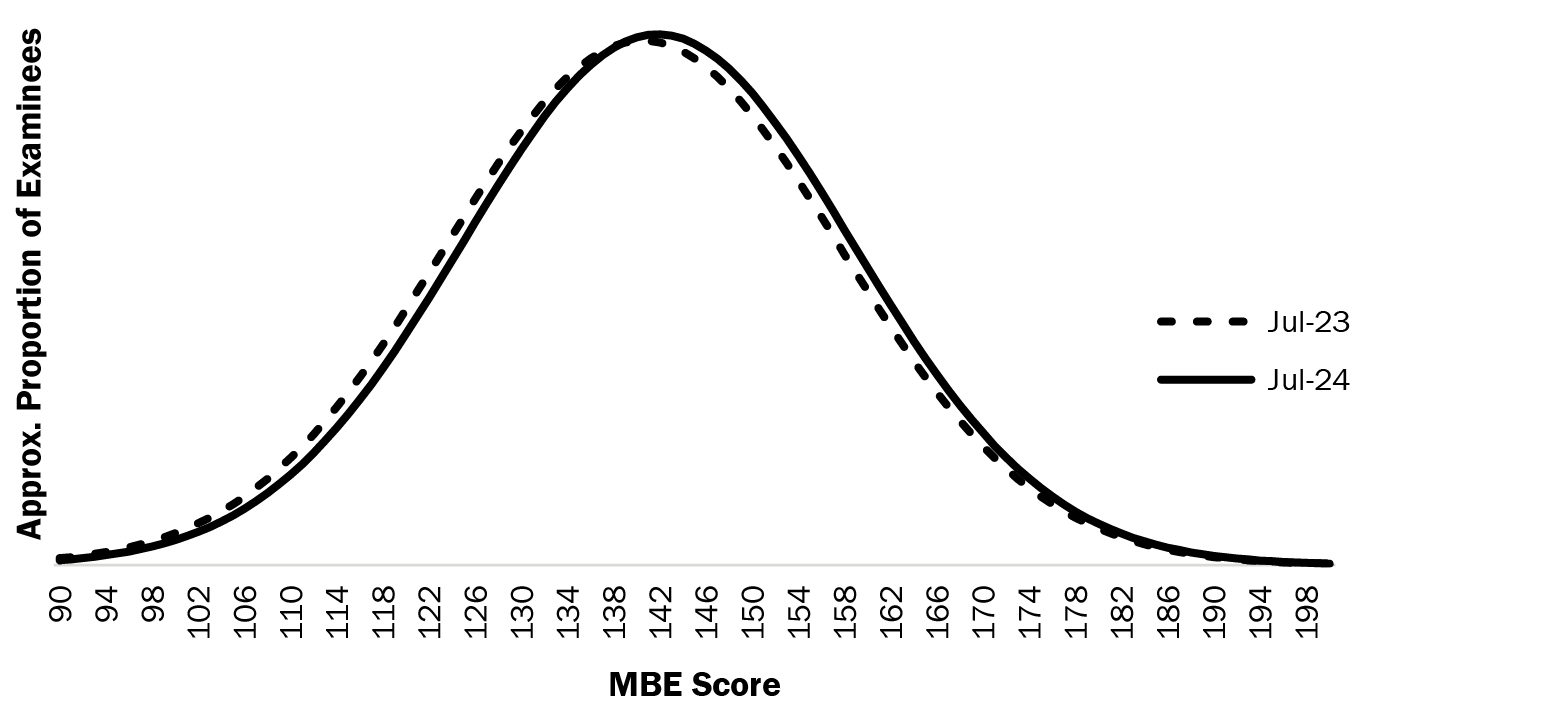

Today I find myself in snowy Wisconsin describing the reverse trend: an increase in MBE scores observed in July 2024. The average MBE score for this administration was 141.8, which is higher by about 1.3 points than the average score in July 2023 (140.5). When the fall 2020 paper-based administrations are excluded, the average MBE score for July 2024 is the highest in the last decade; the second-highest average was 141.7 in July 2017 (the first administration after my presentation on declining scores!).

A 1.3-point difference may not sound like much. And in Figure 1, the difference does not look like much—the smoothed dotted line representing July 2023 MBE performance looks very similar to the smoothed solid line representing July 2024 MBE performance. However, the difference is not only statistically significant but also substantively significant—which is to say, meaningful in the real world as well.

We can be confident that the difference is real and meaningful for two reasons: the scores were earned on a highly reliable exam whose scores are equated, and the number of candidates taking each July test is quite large. Together, these facts give us strong assurance that the observed differences reflect differences in examinee performance rather than extraneous factors such as unfair differences in test form difficulty or content.¹
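To see why the size of the testing group matters so much here, the following is a minimal sketch of a two-sample z-test on the two reported means (141.8 and 140.5). The standard deviation and the group sizes are placeholders chosen only for illustration; they are not NCBE figures, and this simple test is not a description of how NCBE evaluates score differences.

```python
from math import sqrt
from statistics import NormalDist

# Reported in the article.
MEAN_JULY_2024 = 141.8
MEAN_JULY_2023 = 140.5

# Hypothetical values for illustration only (not NCBE data).
ASSUMED_SD = 15.0
ASSUMED_LARGE_N = 40_000
ASSUMED_SMALL_N = 400


def two_sample_z(mean_a, mean_b, sd, n_a, n_b):
    """Return (z, two-sided p) for a difference in means with a common SD."""
    standard_error = sqrt(sd**2 / n_a + sd**2 / n_b)
    z = (mean_a - mean_b) / standard_error
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return z, p


if __name__ == "__main__":
    for n in (ASSUMED_LARGE_N, ASSUMED_SMALL_N):
        z, p = two_sample_z(MEAN_JULY_2024, MEAN_JULY_2023, ASSUMED_SD, n, n)
        print(f"n per group = {n}: z = {z:.2f}, two-sided p = {p:.4f}")
```

Under these assumptions, the same 1.3-point gap clears the conventional 1.96 threshold easily with tens of thousands of examinees per group but not with a few hundred, which is the intuition behind the "quite large" point above.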

Figure 1: Smoothed MBE distributions for July 2023 and July 2024 based on mean and standard deviation

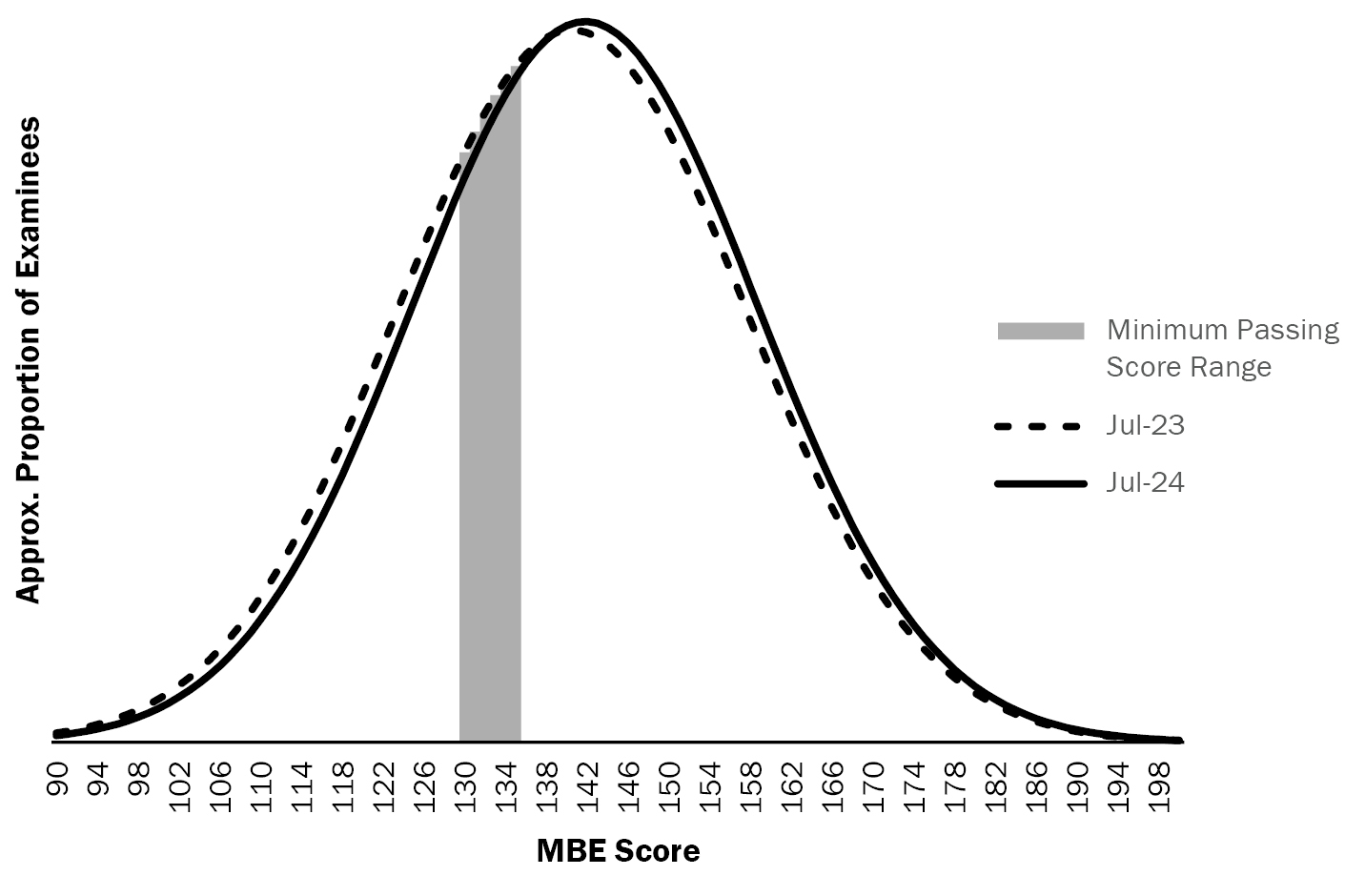

Note that most examinees score near the center of the distributions where the average scores fall, and the range of minimum passing Uniform Bar Examination (UBE) scores jurisdictions currently use (halved here to translate to the MBE scale) is also not so far from the center or peak of the distributions (see Figure 2). This pattern means that small changes to the minimum passing score can have a big impact on the pass rates, as can “small” changes to the shape and/or precise location of the distributions.²

For example, if we shifted the (smoothed) July 2023 distribution to the right by adding one scaled score point to each score, the national pass rate would theoretically³ increase from about 64%⁴ to about 66%. Similarly, if we shifted the July 2023 distribution two points to the right, the national pass rate would theoretically increase from about 64% to about 68%. We can think of the 1.3-point increase from July 2023 to July 2024 as corresponding to an increase in the national pass rate of roughly 3 percentage points.⁵ Note that we focus here on national means. The same general principles would apply with jurisdiction-based data, though the smaller the jurisdiction, the more noise we would expect in the distributions and, as a result, the more variable the pass rates could be.
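The shift exercise can be sketched in a few lines of code. This is a rough illustration under stated assumptions, not the analysis behind the figures above: it treats the smoothed distribution as exactly normal, uses the July 2023 mean of 140.5 and a passing score of 135 on the MBE scale, and backs out the standard deviation implied by a 64% starting pass rate (the article does not report a standard deviation). Because of these assumptions and rounding, the results may differ slightly from the percentages quoted in the text.

```python
from statistics import NormalDist

# Figures taken from the article.
JULY_2023_MEAN = 140.5
PASSING_SCORE = 135.0     # 270 on the UBE scale, halved to the MBE scale
BASE_PASS_RATE = 0.64     # theoretical July 2023 pass rate at that score

# Assumption: the distribution is normal, so the SD can be backed out
# from the mean, the passing score, and the base pass rate.
_z_at_cut = NormalDist().inv_cdf(1.0 - BASE_PASS_RATE)
IMPLIED_SD = (PASSING_SCORE - JULY_2023_MEAN) / _z_at_cut


def pass_rate(shift: float) -> float:
    """Share of scores at or above the passing score after shifting the mean."""
    shifted = NormalDist(JULY_2023_MEAN + shift, IMPLIED_SD)
    return 1.0 - shifted.cdf(PASSING_SCORE)


if __name__ == "__main__":
    print(f"implied SD: {IMPLIED_SD:.1f}")
    for shift in (0.0, 1.0, 1.3, 2.0):
        print(f"shift {shift:+.1f} points -> pass rate {pass_rate(shift):.1%}")
```

The point of the sketch is the sensitivity: because the passing score sits close to the peak of the distribution, each added point moves a noticeable share of examinees across the line.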

Stakeholders who follow MBE score trends and/or bar passage rates are often as interested in explaining why a mean and/or distribution changed as they are in predicting where the scores will be in the future. The trouble is that “prediction is difficult, especially about the future.”⁶

In my 2017 presentation, I discussed some predictors that seemed to influence average MBE scores. These were based on work conducted by Mark A. Albanese, PhD, and others. In a 2015 Bar Examiner article, Dr. Albanese noted that there was a relationship between average performance in an administration and the share of first-time versus repeat test takers in that administration; first-time takers consistently scored better on average than repeat takers.⁷ He also observed a relationship between MBE scores and factors we might describe as associated with “demand” (including the number of law school applicants, number of applicants per available law school seat, law school enrollees, and Credential Assembly Service [CAS] registrations). Albanese also discussed the relationship between Law School Admission Test (LSAT) scores and MBE scores over time, though he explained that a change in LSAT reporting criteria and policy over time had made it somewhat more complex to study that relationship.

Figure 2: Smoothed MBE distributions for July 2023 and July 2024 based on mean and standard deviation, with minimum passing score range highlighted

A follow-up piece⁸ extended these models, reporting on many of the same factors to help make sense of the July 2019 average: the number of LSATs administered and average LSAT score at the 25th percentile, both lagged to reflect the current MBE-takers’ class; likely first-time takers versus likely repeaters; and the total number of examinees.

We cannot know the total number of test takers precisely until an administration has occurred, though trends in exam orders or the rosters jurisdictions upload in advance of an administration can give us hints. Similarly, we cannot know the share of first-time takers until after a given test.

Law School Admission Council (LSAC) data can help us forecast MBE or UBE results to some degree. LSAC’s historical test-taker, applicant, and matriculant counts all spiked in 2021 relative to the other years in the 10-year span 2015–2024.⁹ To the extent that LSAC data continues to be a meaningful indicator of later bar exam performance, this trend suggests that we might be seeing particularly strong performance on the bar exam now due to the performance of the class matriculating in 2021. The returns in 2022 and 2023 of takers, applicants, and matriculants to levels observed immediately pre-COVID could similarly portend a return of next July’s MBE average to that of July 2023.

We can additionally look to MPRE data, as a previous Bar Examiner article notes, when trying to predict MBE or bar exam performance.¹⁰ Trends in MPRE average scores would predict relative stability or perhaps a slight decrease on average for July 2025. The average performance for November 2024 was down relative to the November 2023 performance, though the other two 2024 administrations (March and August) both showed stronger national performance as compared with the parallel administration in 2023.

But perhaps even those very vague predictions will be wrong! It is challenging in the clearest and most stable of times to separate the signal from the noise. Individual scores and means can fluctuate even in periods of relative stability for all sorts of reasons or combinations (or interactions) of those reasons.

And it is becoming more difficult than ever before as more law schools and the American Bar Association adopt test-optional policies and/or allow other exams (e.g., the Graduate Record Examinations [GRE]) to be considered during the admissions process. This is not to critique these policies but to make the point that as the number of options available to people seeking admission to law schools or seeking status as a licensed attorney increases, it becomes more challenging to study the impact of any single pathway primarily because people self-select into pathways in ways that may not be well understood or easily modeled.

Even within a set pathway, opportunities and possibilities are expanding. For example, Arizona offers candidates the opportunity to sit for a bar examination while they are still in law school, allowing them to be ready to practice upon graduation.¹¹ In such jurisdictions, candidates who would previously have been most likely to test in July could shift to testing in February. This could complicate administration-to-administration comparisons over time.

The models also do not (and, realistically, often cannot) account for rare, societal-level events. Interestingly, the two highest MBE means recorded since at least the 1980s are from July 2008 and fall 2020. The former came at the height of the 2008 financial crisis, though the crisis’s full effect on law school graduates’ employment rates would not be felt for a few more years.¹² The latter was arguably the height of the COVID pandemic in the United States. Explanations for why people would, on average, perform better at times of greatest uncertainty could include differences in motivation or differences in competing priorities.

This will all become even more complex going forward with the NextGen bar exam and the creation of additional jurisdiction-specific bar exams. New pathways to licensure that do not require the MBE or any other licensure examination add additional complexity.

What could this all mean? MBE scores could tend to decrease as new pathways become available. If nonexamination pathways are most attractive and/or most available to candidates who would score near the top of their jurisdiction’s distribution had they tested, removing their scores from the sample would lower the mean (compared to what it theoretically would have been had they tested and compared perhaps to administrations prior to the policy change).

However, the opposite could be true: MBE scores could increase when a new nonexamination pathway is offered if that pathway is most attractive to candidates who would score particularly low on the MBE. Removing them from the scoring pool would be expected to raise the mean.

Finally, the average MBE score could remain stable. If new pathways attract people across a broad spectrum in would-be MBE performance, no significant change may result.
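The three scenarios above can be made concrete with a small sketch. Everything in it is synthetic: the pool of 1,000 scores is generated from a normal distribution with an assumed mean of 140.5 and an assumed standard deviation of 15, and the assumption that exactly 10% of would-be examinees leave for a nonexamination pathway is purely hypothetical.

```python
from statistics import NormalDist, mean

# Synthetic pool (assumption): 1,000 evenly spaced quantiles of a normal
# distribution, in ascending order. These are not real examinee scores.
ASSUMED_MEAN, ASSUMED_SD, POOL_SIZE = 140.5, 15.0, 1000
_dist = NormalDist(ASSUMED_MEAN, ASSUMED_SD)
POOL = [_dist.inv_cdf((i + 0.5) / POOL_SIZE) for i in range(POOL_SIZE)]

LEAVERS = POOL_SIZE // 10  # hypothetical: 10% choose a nonexamination pathway


def scenario_means(pool):
    """Mean of the remaining scores under three hypothetical exit patterns."""
    ordered = sorted(pool)
    return {
        "baseline (nobody leaves)": mean(ordered),
        "top scorers leave": mean(ordered[:-LEAVERS]),
        "low scorers leave": mean(ordered[LEAVERS:]),
        # Every tenth score is removed, so leavers span the whole range.
        "leavers across the spectrum": mean(
            s for i, s in enumerate(ordered) if i % 10 != 5
        ),
    }


if __name__ == "__main__":
    for label, value in scenario_means(POOL).items():
        print(f"{label}: {value:.2f}")
```

In this toy setup the mean falls when the leavers come from the top of the distribution, rises when they come from the bottom, and barely moves when they are spread across the range. Real self-selection would rarely be this clean, which is part of why the effect is hard to forecast.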

In UBE contexts, the average MBE score has been not only a predictor of bar exam pass rates but a driver of them. However, as new licensure pathways emerge within UBE jurisdictions in particular, the MBE average (or, more broadly, the average earned on any scored component of any pathway) and the pass rates (if reported across pathways) could potentially decouple depending on jurisdiction reporting policies. If prospective lawyers pursuing pathways to licensure other than the traditional bar exam are included in a jurisdiction’s passage statistics but are not included in the MBE average (and/or the average reported for any other scored component of one or more pathways), for example, predicting outcomes will be that much more challenging.

Again, this is offered not as a critique but as a fact-based observation. And these predictions are just that: forecasts. Just as weather forecasts for a given day or week can be wrong, forecasts of scores or score trends could be wrong. It is usually impossible to know whether any single datapoint represents a blip, a continuation of a trend, or the start of a new long-term trajectory. That said, just as I can be confident that the weather in Wisconsin in winter will be sufficiently cold that it is worth investing in high-quality mittens, I remain confident we will continue to see patterns in the near future that we have observed consistently over time: July scores and pass rates, on average, will remain higher than February ones. Scores and pass rates among first-time takers as a group will, on average, remain higher than those for repeaters as a group. However, the real proof will be in the annual statistics section of the next issue of The Bar Examiner—stay tuned!

Notes

1. A future Testing Column will explore some hypothetical extraneous factors in more depth.

2. Michael T. Kane, PhD, and Joanne Kane, PhD, “Standard Setting 101: Background and Basics for the Bar Admissions Community,” 87(3) The Bar Examiner 9–17 (Fall 2018).

3. Note: pass rates within a jurisdiction are not directly a function of the MBE. Pass rates additionally depend on local policies and requirements. The local passing score matters a lot. For purposes of this piece I am using 135/270, which is currently the modal, or most frequently adopted, passing score in the country and also the highest passing score in US jurisdictions.

4. Note: the reported pass rate for all jurisdictions for the July 2023 exam was 66%. “Persons Taking and Passing the 2023 Bar Examination.”

5. Again, this analysis is based on a single passing score of 135/270, which is not reflective of all UBE jurisdictions and is used here because it is the modal score.

6. Daniel P. Dickstein, “Editorial: It’s Difficult to Make Predictions, Especially About the Future: Risk Calculators Come of Age in Child Psychiatry,” 60(8) Journal of the American Academy of Child and Adolescent Psychiatry 950–951 (August 2021).

7. See, for example, Mark A. Albanese, PhD, “The Testing Column: The July 2014 MBE: Rogue Wave or Storm Surge?” 84(2) The Bar Examiner 35–48 (June 2015).

8. Mark A. Albanese, PhD, “The Testing Column: July 2019 MBE: Here Comes the Sun; August 2019 MPRE: Here Comes the Computer,” 88(3) The Bar Examiner 33–35 (Fall 2019).

9. For a graph with this LSAC data and more, visit https://report.lsac.org/View.aspx?Report=HistoricalData&Format=PDF.

10. Joanne Kane, PhD; Douglas R. Ripkey, MS; and Mengyao Zhang, PhD, “The Testing Column: Q&A: Using MPRE Scores to Predict Bar Passage.”

11. Hon. Scott Bales, “Is Sooner Sometimes Better than Later? Arizona’s Early Bar Exam,” 86(1) The Bar Examiner 50–55 (March 2017).

12. James G. Leipold and Judith N. Collins, “The Entry-Level Employment Market for New Law School Graduates 10 Years After the Great Recession,” 86(4) The Bar Examiner 8–16 (Winter 2017–2018).

Joanne Kane, PhD, is Associate Director of Psychometrics for the National Conference of Bar Examiners.

This article originally appeared in The Bar Examiner print edition, Winter 2024-2025 (Vol. 93, No. 4), pp. 29–32.
